Machine learning spans a spectrum, with supervised learning on one end and unsupervised learning on the other. Support Vector Machines (SVMs) are supervised learning models used to analyze and classify data. We train a model on labeled examples, and once we have the model, we can use it to classify unknown data. Let’s say you have a set of data points, each belonging to one of two possible classes. Our task is to find the best possible way to put a boundary between the two sets of points. When a new point comes in, we can use this boundary to decide whether it belongs to class 1 or class 2. In real life, these data points can be observations such as images, text, characters, or protein sequences. How can we find this boundary in an optimal way?

What’s the big deal? Is it so difficult to put a line between two sets of points?

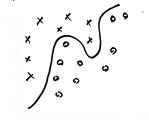

Consider the image shown here. If you are asked to draw a straight line between the crosses and circles, how will you do it? It’s not possible, because these points are not linearly separable. Two sets of points in a plane are said to be linearly separable if you can separate them using a single straight line. So to separate the two sets of points, you have to draw a curved line, as shown in the picture. Simple enough, right? The problem is that the curve becomes more and more convoluted as you add points, and describing it mathematically becomes more difficult. As the dimensionality of the points increases, defining this boundary becomes increasingly complex. Hence we need a way to transform these points so that a simple straight line is enough to separate them.

What are Support Vector Machines?

SVMs are learning models which rely on support vectors to get the optimal boundary. Now what are support vectors? If you look carefully at the image above, the curved line only depends on the points closest to it. While drawing the curvy line, you did not think about the point on the top left or the bottom right. You were only concerned about the points in one class that lie closest to the other class. They are like the weakest links, and they decide where you draw the line. These points are called support vectors. The word “vector” is simply a general term for a data point, and it is the usual name for points in higher dimensions. Hence the vectors which support the boundary and help us decide where it should be are called support vectors, and the learning models which use them are called Support Vector Machines.

In higher dimensions, the boundary between the classes is called a decision surface, and a flat decision surface (the counterpart of a straight line) is called a hyperplane. Support vectors are the data points that lie closest to the decision surface (the decision surface is the curvy line in our case). These points are the most difficult to classify. SVMs maximize the margin around the separating hyperplane, where the margin is the distance from the hyperplane to the closest points. Consider the image shown here. If we want to draw a line between the two sets of points, we can just draw a straight line. The lines in all four figures on the right are correct: each of them correctly divides the two sets of points. But the one on the bottom right is the best division, because it is equidistant from the support vectors of both classes and so leaves the widest margin. This is what SVMs aim for.
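
The idea of preferring the line with the widest margin can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the data points and the three candidate lines are made up, and a real SVM solves an optimization problem rather than comparing a handful of hand-picked lines.

```python
import math

# Toy 2-D data, made up for illustration: one class per list.
positives = [(3.0, 3.0), (4.0, 3.0), (3.0, 4.0)]
negatives = [(1.0, 1.0), (0.0, 1.0), (1.0, 0.0)]

def signed_distance(w, b, point):
    """Signed distance from `point` to the line w[0]*x + w[1]*y + b = 0."""
    norm = math.hypot(w[0], w[1])
    return (w[0] * point[0] + w[1] * point[1] + b) / norm

def margin(w, b):
    """Distance from the line to its closest point, or None if a point is misclassified."""
    distances = [signed_distance(w, b, p) for p in positives]
    distances += [-signed_distance(w, b, p) for p in negatives]
    smallest = min(distances)
    return smallest if smallest > 0 else None

# Hypothetical candidate lines, each given as (w, b). All three separate the data.
candidates = {
    "steep": ((1.0, 0.2), -2.2),
    "shallow": ((0.2, 1.0), -2.2),
    "diagonal": ((1.0, 1.0), -4.0),
}

margins = {name: margin(w, b) for name, (w, b) in candidates.items()}
valid = [name for name in margins if margins[name] is not None]
best = max(valid, key=lambda name: margins[name])

# The support vectors are the points that sit exactly at the margin of the best line.
w, b = candidates[best]
support_vectors = [
    p for p in positives + negatives
    if math.isclose(abs(signed_distance(w, b, p)), margins[best])
]

print(best, margins[best], support_vectors)
```

Here the line that wins is the one sitting halfway between the closest pair of points from the two classes, and only those two points end up as support vectors. Moving any of the other points slightly would not change the result.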

Is there a way to take care of the curviness problem?

Consider the image shown here. If you are asked to draw a line to separate the red points from the blue points, you will draw a curved line as shown in the figure. But we don’t want to draw a curvy line anymore, so we transform the points to a higher dimension where they are easy to separate. We use a simple transformation function to project the points into three dimensions. As shown in the figure, once the points are projected into the third dimension, we can easily draw a simple plane to separate the two sets of points. It’s just like how objects placed at different heights above the ground look like they are at the same level when you look from the top. This is the idea behind the Kernel Trick. The kernel is the function that defines this transformation. More precisely, it computes the similarity (inner product) between two points as if they had already been transformed, so the higher-dimensional coordinates never have to be computed explicitly. SVMs use this extensively to get the optimal decision surface. Given a set of points, an SVM effectively works in the higher-dimensional space and obtains a simple hyperplane there to separate the two sets of points.
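
To make this concrete, here is a minimal sketch in Python. Everything in it is an assumption chosen for illustration: the toy points, the transformation `lift` (which adds the squared distance from the origin as a third coordinate), the threshold of the separating plane, and the degree-2 polynomial kernel in the second half. The second half checks that the kernel gives the same number as an explicit transformation followed by an inner product, which is why the transformation itself can be skipped.

```python
import math

# Toy data, made up for illustration: inner points are surrounded by outer points,
# so no straight line in the plane can separate the two classes.
inner = [(0.5, 0.0), (0.0, 0.5), (-0.5, 0.0), (0.0, -0.5)]
outer = [(2.0, 0.0), (0.0, 2.0), (-2.0, 0.0), (0.0, -2.0)]

def lift(point):
    """Map (x, y) to (x, y, x^2 + y^2); the new coordinate is the squared distance from the origin."""
    x, y = point
    return (x, y, x * x + y * y)

# In three dimensions, the flat plane z = threshold separates the classes.
threshold = 2.0
inner_below = all(lift(p)[2] < threshold for p in inner)
outer_above = all(lift(p)[2] > threshold for p in outer)
print(inner_below, outer_above)

def dot(u, v):
    """Inner product of two vectors of equal length."""
    return sum(a * b for a, b in zip(u, v))

def phi(point):
    """Explicit feature map that corresponds to the kernel (p . q)^2 in two dimensions."""
    x, y = point
    return (x * x, math.sqrt(2) * x * y, y * y)

def poly_kernel(p, q):
    """Degree-2 polynomial kernel, computed without building phi(p) or phi(q)."""
    return dot(p, q) ** 2

p, q = (1.0, 2.0), (3.0, -1.0)
explicit = dot(phi(p), phi(q))   # transform first, then take the inner product
implicit = poly_kernel(p, q)     # same value, straight from the original points
print(math.isclose(explicit, implicit))
```

The saving looks small in this example, but for kernels whose implied space has very many (or infinitely many) dimensions, computing the kernel directly is the only practical option.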

Up until now, we have just talked about two classes. This can be generalized to any number of classes. Given a set of points which may belong to ‘n’ possible classes, we can combine several two-class decision surfaces to separate them.
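
Two common ways of building a multi-class classifier out of two-class boundaries are one-vs-rest (one boundary per class) and one-vs-one (one boundary per pair of classes). The text above does not say which scheme is meant, so the sketch below simply enumerates the boundaries each scheme would need for a hypothetical set of four class labels.

```python
from itertools import combinations

# Hypothetical class labels, chosen for illustration.
classes = ["circle", "cross", "square", "triangle"]

# One-vs-rest: each class is separated from all the others combined.
one_vs_rest = [(c, [other for other in classes if other != c]) for c in classes]

# One-vs-one: every pair of classes gets its own boundary.
one_vs_one = list(combinations(classes, 2))

n = len(classes)
print(len(one_vs_rest), "boundaries for one-vs-rest")
print(len(one_vs_one), "boundaries for one-vs-one")
```

With n classes, one-vs-rest needs n boundaries and one-vs-one needs n(n-1)/2, so the second scheme trains more classifiers but each one only sees the data of two classes.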

SVMs are non-probabilistic classifiers which provide a different approach to the problem of pattern recognition. They are quite different from approaches such as Artificial Neural Networks. Because the underlying optimization problem is convex, SVM training always finds a global optimum, and the simple geometric interpretation of SVMs provides valuable insight for future research. SVMs are largely characterized by the choice of their kernels. Current research in SVMs is mainly concerned with the choice of kernel functions, along with reducing the amount of data needed for training. SVMs have a very strong mathematical foundation and they provide excellent results. Once the computational issues are resolved, they will be used in many more areas.
